Docker CI / CD - Github Actions

Running builds, executing tests and deploying by hand can become a nightmare and an error-prone process: human errors easily slip in. As soon as the project grows, build processes tend to get more and more complex.

What if:

- Builds could be automated, including green-flag checks (e.g. running the tests).

- Builds could be triggered whenever a merge into a given branch or a push to the server happens.

- Each build would generate a complete Docker image, so we don't have to worry about server pollution or not having the exact versions of software installed.

- The image won't take up much space, since we start from a previous Docker image that already has the OS and software preinstalled.

- We can upload it to a cloud registry, so it can be consumed from any local machine or cloud provider (Amazon, Azure, Google Cloud...).

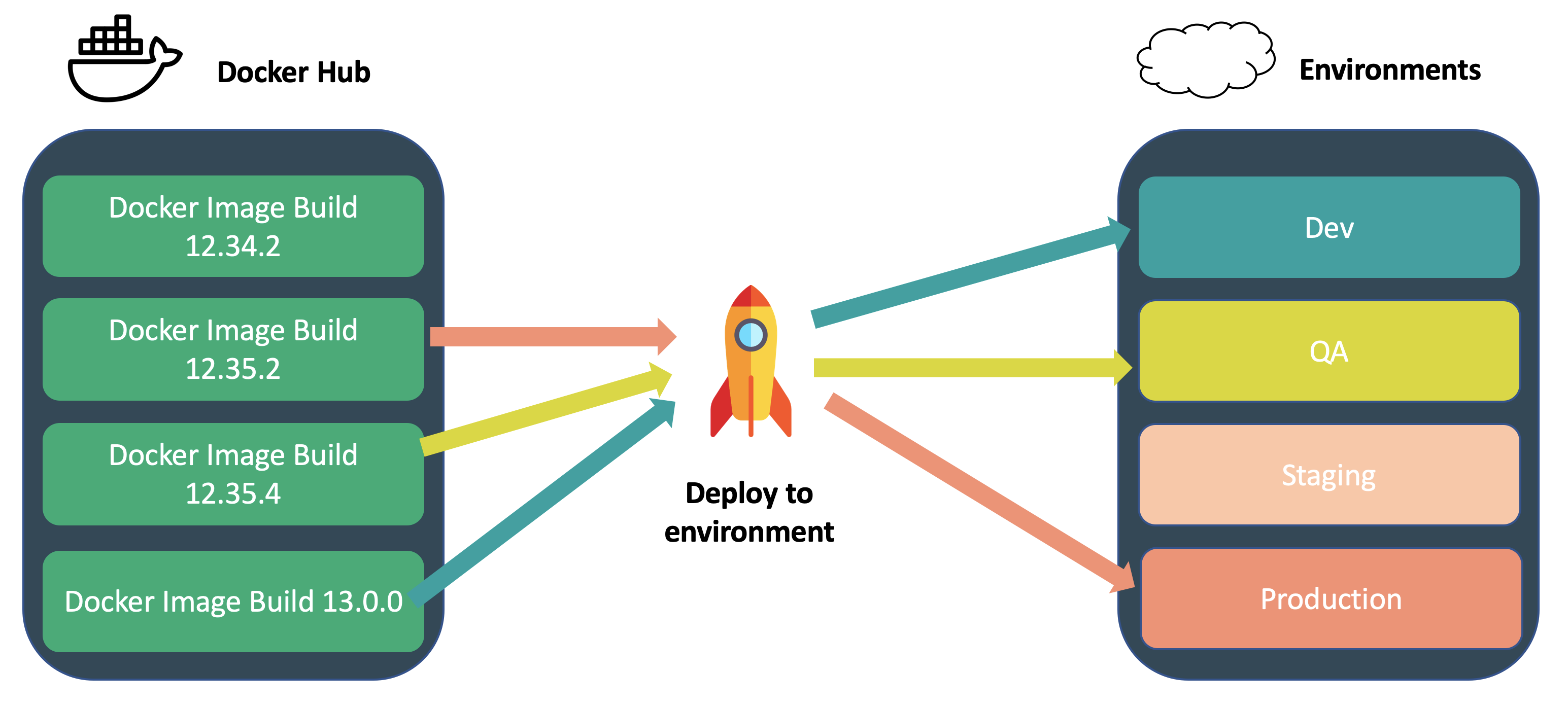

- You could easily swap different build versions.

Those are the benefits you get from configuring a CI/CD server (in this post we will use Github Actions) and combining it with Docker container technology.

This is the third post of the Hello Docker series, following the first and second posts.

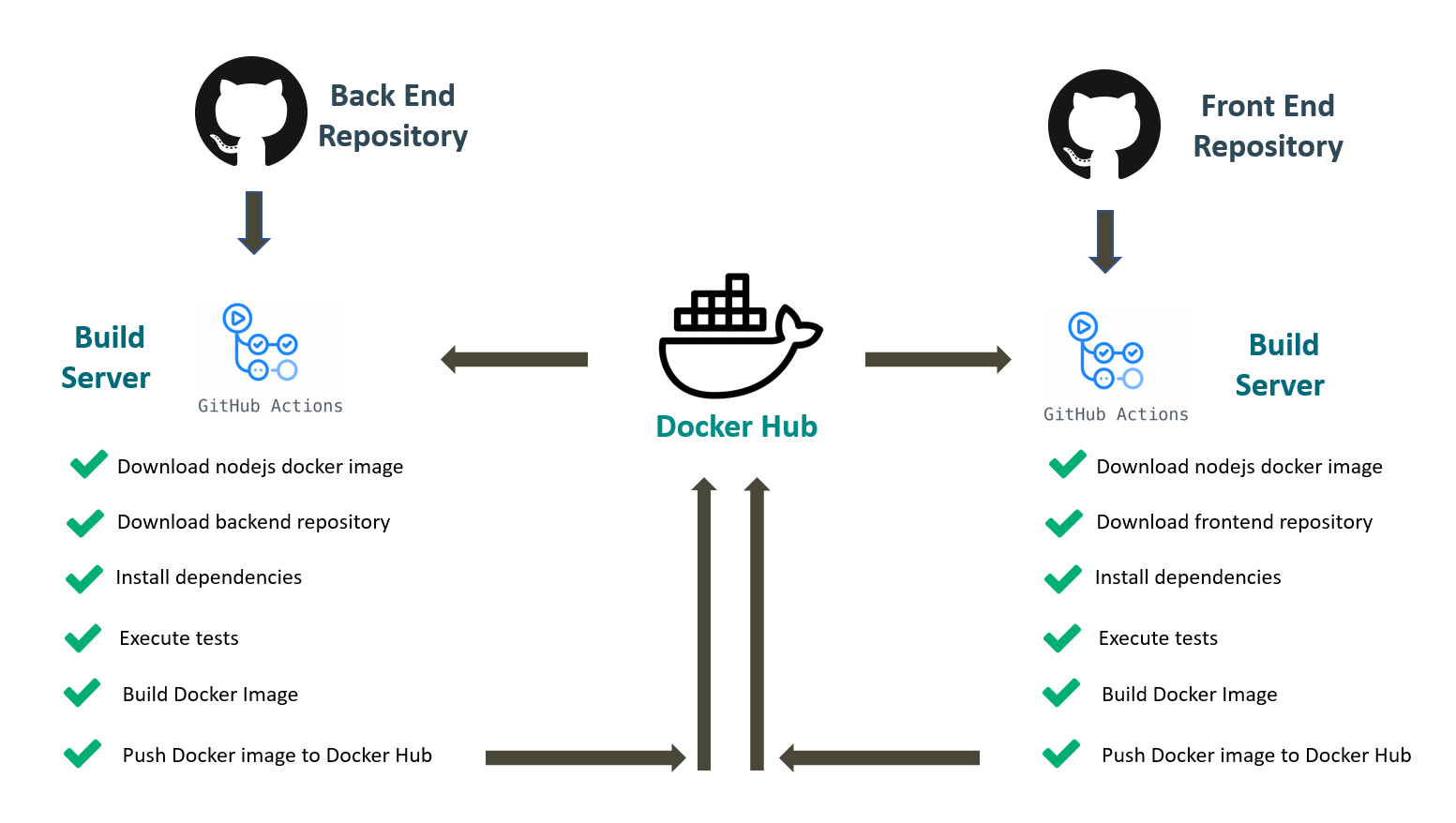

In this post we will use Github Actions to automatically trigger the following processes on every merge to master or pull request:

- Fire up a clean linux + nodejs instance.

- Download the repository source code.

- Install the project dependencies.

- Execute the associated unit tests.

- Generate a docker image including the production build.

- Tag it and publish it to the Docker Hub registry.

We will configure this for both a Front End project and a Back End project.

If you want to dig into the details, keep on reading :)

Agenda

Steps that we are going to follow:

- We will present the sample project.

- We will work manually with Docker hub.

- We will link our github project to Github Actions.

- We will set up a basic CI step.

- We will check our unit tests.

- We will check if the docker container can be built.

- We will upload a container to docker hub registry (including build number).

- We will check if our Continuous Delivery process succeeded by consuming those images from our docker compose file configuration.

Sample project

A Chat app will be taken as an example. The application is split into two parts: client (Front End) and server (Back End), which will be containerized using Docker and deployed using Docker Containers.

We already have a couple of repositories that together make up the chat application.

Following our actual deployment approach (check first post in this series), a third part will be included as well: a load balancer - its responsibility will be to route the traffic to the front or back depending on the requesting url. The load balancer will also be containerized and deployed using a Docker Container.

Docker hub

In our last post we took an Ubuntu + Nodejs Docker image as our starting point; it was great to retrieve it from Docker Hub and to control which version we were downloading.

Wouldn't it be cool to be able to push our own images to that Docker Hub Registry including versioning? That's what Docker Hub offers you: you can create your own account and upload your Docker Images there.

Advantages of using Docker Hub:

- You can maintain several versions of your container images (great for keeping different environments, A/B testing, canary deployments, blue-green deployments, rolling back, ...).

- Since Docker images are built from small layers, our custom images won't take up much space (e.g. a given image built on top of a Linux image won't ship the OS again; it will only point to that previous image).

- Your container image is already in the cloud, so deploying it to a cloud provider is a piece of cake.

Docker Hub is great to get started: you can create an account for free and upload your docker images (free version has a restriction: you get unlimited public repositories and one private repository).

If later on you need to use it for business purposes and restrict access, you can use a private Docker registry. Some providers:

- Docker Hub Enterprise.

- Quay.io

- Amazon ECR

- Azure ACR

- Google Cloud.

- Gitlab.

- ...

Github Actions

Although Docker helps us standardize the creation of a given environment and configuration, building new releases manually can become a tedious and error-prone process:

- We have to download the right cut of code to be built.

- We have to launch all the automated tests and check if they are passing.

- We have to generate our Docker image.

- We have to add some proper versioning (tagging in Docker terms).

- We have to manually push it to Docker Hub registry.

Imagine doing that manually on every merge to master; you will get sick of this deployment hell... Is there any automated way to do that? Github Actions to the rescue!

Just by spending some time creating an initial configuration, Github Actions will automatically:

- Get notified of any PR or merge to master being triggered (you can configure policies).

- Create a clean environment (e.g. in our case it takes an Ubuntu + Nodejs image).

- Download the right branch cut from the repository.

- Execute the tests.

- Create the docker container image that will contain the production build.

- On success deploy it to Docker Hub registry (adding a version tag).

One of the advantages of Github Actions is that it's quite easy to set up:

- You don't need to install any infrastructure (it's cloud based).

- The main configuration is done via a yml file.

- It offers a community edition where you can play with your test projects or use it for your open source projects (only public projects).

- It offers an enterprise version for your private projects.

Accounts setup

Forking sample projects

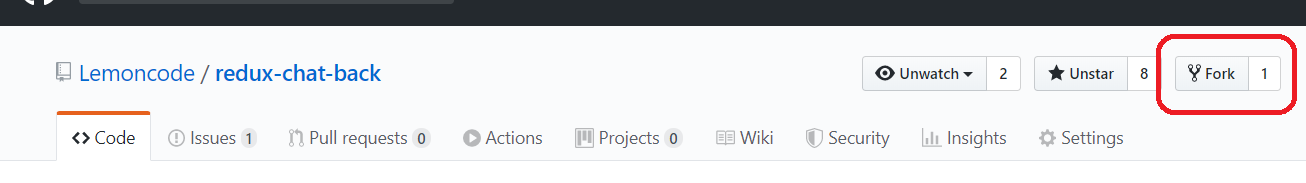

If you want to follow this tutorial you can start by forking the Front End and Back End repos:

- Front End: https://github.com/Lemoncode/container-chat-front-example

- Back End: https://github.com/Lemoncode/container-chat-back-example

By forking these repos, a copy will be created in your Github account and you will be able to link them to your Github Actions and set up the CI process.

Docker Hub signup

In our previous post from this series we consumed an image container from Docker Hub. If we want to upload our own image containers to the hub (free for public images), we need to create an account, which you can do in the following link.

Let's get started

In this tutorial, we will be applying automation to our forked chat application's repositories using a Github Actions workflow. Github Actions will launch the workflow after every commit, executing the following tasks:

- Tests will be run.

- The application will be containerized using Docker.

- The created image will be pushed to a Docker Registry, Docker Hub in our case.

Manually uploading an image to Docker Hub

Before we start automating stuff, let's give the manual process a try.

Once you have your Docker Hub account, you can interact with it from your shell (open your bash terminal, or Windows cmd).

First, log in to Docker Hub:

$ docker login

In order to push your images, they have to be tagged according to the following pattern:

<Docker Hub user name>/<name of the image>:<version>

The version is optional. If none is specified, latest will be assigned.

$ docker tag front <Docker Hub user name>/front

And finally push it:

$ docker push <Docker Hub user name>/front

From now on, the image will be available for everyone's use.

$ docker pull <Docker Hub user name>/front

To create the rest of the images that we need, we have to follow the exact same steps.

This can become a tedious and error-prone process. In the following steps we will learn how to automate this using Github Actions CI/CD.

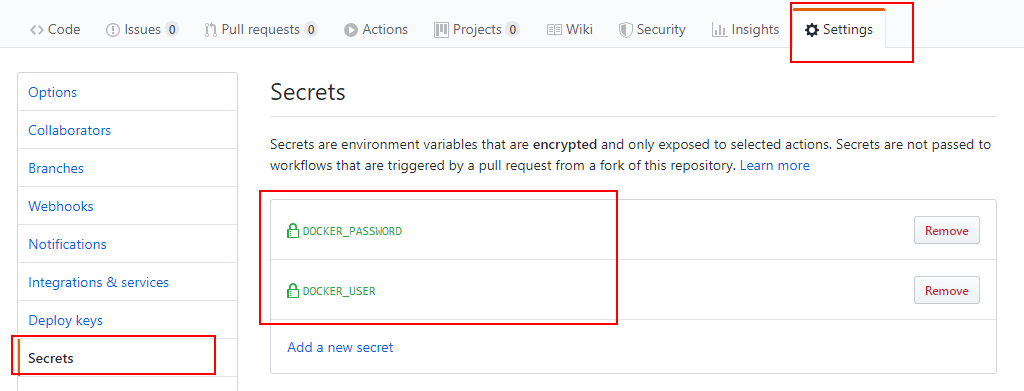

Linking Docker Hub credentials

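Once the repositories are forked, you need to enter your Docker Hub user and password in the settings of each project (Back and Front), in the repository secrets section. We will name them DOCKER_USER and DOCKER_PASSWORD, the names the workflow reads later on. This has to be done in both repositories.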

Front

Back

These variables will be used later to log in to Docker Hub (note: the first time you enter the data, the values are shown as clear text. Once you set them up, they are shown as a password field).

Now we can finally begin to automate our tasks.

Github Actions configuration

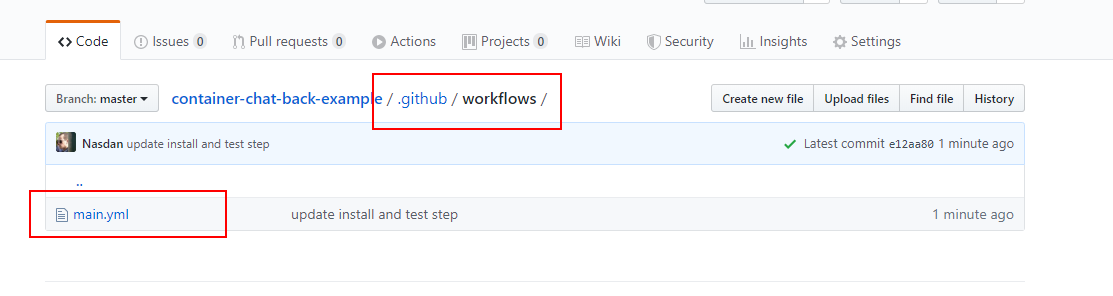

To configure Github Actions, we need to create a file named main.yml in the .github/workflows folder of your project. This is where we'll describe the actions that will be executed by Github Actions. We could have as many yml files as we want.

Back End

The steps to create the yml file for the back end application are the following:

- Name the workflow to be created: in this case we are going to choose Backend Chat CI/CD.

- Define event triggers: tell Github when to run the workflow.

- Select OS: select the OS where we want to run our app.

- Checkout repository: get access to all the files in the repository.

- Setup node: install nodejs with a specific version.

- Install Dependencies: like in a local environment, we can just execute npm install.

- Test Application: in our case we will run the unit tests that have been implemented for the application.

- Log into Docker Hub: before pushing an image to the Docker Hub Registry we need to log in to Docker Hub.

- Build Docker Image: if tests pass, we just create the container image (this will search for a Dockerfile file at the root of your repository and follow the instructions from that file to create a production build, storing it in a Docker image container).

- Tag Docker Images: We need to identify the container image with a given tag.

- Push Docker Images: push the generated image to the Docker Hub registry.

A summary of this build process:

1. Name the workflow to be created

Let's create our main.yml file at the .github/workflows folder of our backend repository:

We will start by indicating the workflow name.

./.github/workflows/main.yml

+ name: Backend Chat CI/CD

2. Define event triggers

We can trigger this workflow on several events like push, pull_request, etc.

In this case we are going to launch the CI/CD process whenever there is a push to master, or Pull Request pointing to master.

./.github/workflows/main.yml

      name: Backend Chat CI/CD
    + on:
    +   push:
    +     branches:
    +       - master
    +   pull_request:
    +     branches:
    +       - master
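Other events can start the same workflow. As a minimal sketch (not part of the sample repositories), the trigger block below keeps the push and pull request triggers and adds two more: a run on version tags and a manual run through workflow_dispatch. The v* tag pattern is an assumption chosen for illustration.

```yaml
name: Backend Chat CI/CD
on:
  push:
    branches:
      - master
    tags:
      - 'v*'           # assumed tag naming scheme, e.g. v1.0.0
  pull_request:
    branches:
      - master
  workflow_dispatch:   # lets you start the workflow by hand from the Actions tab
```

When both branches and tags are listed under push, the workflow runs if either filter matches.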

3. Select OS

After that, we can create different jobs for build, deploy, etc. We will start with the ci job (build and tests) and select the OS (Operating System) that all tests will run under. Here you have the list of available OS.

In this case we will choose a linux instance (Ubuntu).

./.github/workflows/main.yml

      name: Backend Chat CI/CD
      on:
        push:
          branches:
            - master
        pull_request:
          branches:
            - master
    + jobs:
    +   ci:
    +     runs-on: ubuntu-latest
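Keep in mind that ubuntu-latest moves to a newer Ubuntu release over time. If you prefer a fixed environment, you could pin a specific runner label instead. The sketch below assumes ubuntu-22.04 is still offered; check the list of available OS before relying on it.

```yaml
jobs:
  ci:
    # assumption: ubuntu-22.04 is one of the currently available runner labels
    runs-on: ubuntu-22.04
```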

4. Checkout repository

We need access to all the files in the repository, so we need to clone it in this environment. Instead of manually defining all the steps to clone it from scratch, we can use an action that already exists: the Github Marketplace offers official actions from the Github team and from other companies. In this case we will use the checkout action from the Github team to check out our repository (download the repository to a given folder):

./.github/workflows/main.yml

      ...
      jobs:
        ci:
          runs-on: ubuntu-latest
    +     steps:
    +       - uses: actions/checkout@v1
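This post pins the checkout action to v1. Newer major versions have been published since then, so on a current project you would probably reference one of those. The fragment below is only a sketch that assumes v4 suits your setup; the rest of this post keeps using v1.

```yaml
jobs:
  ci:
    runs-on: ubuntu-latest
    steps:
      # assumption: v4 is a newer major version of the same official action
      - uses: actions/checkout@v4
```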

5. Setup node

We will make use of another action, setup-node. This action, developed by the Github team, allows us to get a nodejs environment up and running (even specifying a version):

In this case we are going to request nodejs version 12.x.

./.github/workflows/main.yml

      ...
          steps:
            - uses: actions/checkout@v1
    +       - uses: actions/setup-node@v1
    +         with:
    +           node-version: '12.x'
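If you need to check the project against more than one nodejs version, the same action can be combined with a build matrix. This is a hedged sketch: the two versions listed are assumptions for illustration, and the sample project only requires 12.x.

```yaml
jobs:
  ci:
    runs-on: ubuntu-latest
    strategy:
      matrix:
        node-version: ['10.x', '12.x']   # assumed versions, adjust to your project
    steps:
      - uses: actions/checkout@v1
      - uses: actions/setup-node@v1
        with:
          # the job runs once per entry in the matrix
          node-version: ${{ matrix.node-version }}
```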

6. Install dependencies

So we've got our ubuntu + nodejs machine up and running. With the checkout action we have already downloaded our project source code from the repository, so now it's time to execute an npm install before we start running the tests.

To execute it, we create a new step with name Install and we execute the command npm install in the run section.

./.github/workflows/main.yml

      ...
          steps:
            - uses: actions/checkout@v1
            - uses: actions/setup-node@v1
              with:
                node-version: '12.x'
    +       - name: Install
    +         run: npm install

7. Test Application

All the plumbing is ready, so now we can add an npm test command; this will just run all the tests from our test suite.

We are including the install and test scripts in a common step (we have to add a pipe character to indicate that we are going to run several scripts in the same step); you could just as well split them into separate steps.

./.github/workflows/main.yml

      ...
          steps:
            - uses: actions/checkout@v1
            - uses: actions/setup-node@v1
              with:
                node-version: '12.x'
    -       - name: Install
    +       - name: Install & Tests
    -         run: npm install
    +         run: |
    +           npm install
    +           npm test
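For reference, this is roughly how the split version could look. Separate steps make it easier to see in the build log whether the install or the tests failed. The sketch also swaps npm install for npm ci, which assumes a package-lock.json is committed to the repository; if it isn't, keep npm install.

```yaml
jobs:
  ci:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v1
      - uses: actions/setup-node@v1
        with:
          node-version: '12.x'
      - name: Install
        run: npm ci    # assumption: package-lock.json exists in the repo
      - name: Tests
        run: npm test
```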

8. Log into Docker Hub

Right after all the scripts have been executed, we want to upload the Docker image that we will generate to the Docker Hub Registry.

Github Actions runs on the OS that we defined in the runs-on section. These virtual environments include commonly used preinstalled software, which allows us to use docker without running an install docker step.

The first step is to log in to Docker Hub (we will make use of the values we added to our repository secrets section; see the section Linking Docker Hub credentials in this post).

We will create a new job, the cd (continuous delivery) job, that:

- Waits for the previous ci (continuous integration) job to complete successfully.

- Checks out the repository again, since a new job runs on a fresh instance.

- Logs in to Docker Hub using the secrets we stored previously, read through the Github secrets context:

./.github/workflows/main.yml

      ...
      jobs:
        ci:
          runs-on: ubuntu-latest
          steps:
            - uses: actions/checkout@v1
            - uses: actions/setup-node@v1
              with:
                node-version: '12.x'
            - name: Install & Tests
              run: |
                npm install
                npm test
    +   cd:
    +     runs-on: ubuntu-latest
    +     needs: ci
    +     steps:
    +       - uses: actions/checkout@v1
    +       - name: Docker login
    +         run: docker login -u ${{ secrets.DOCKER_USER }} -p ${{ secrets.DOCKER_PASSWORD }}
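Passing the password with -p works, but Docker itself warns that this is insecure and suggests --password-stdin. A possible variant of the login step is sketched below; it only shows the cd job (the ci job stays as above), and mapping the secrets to step-level environment variables with the same names is a choice made for this example.

```yaml
jobs:
  cd:
    runs-on: ubuntu-latest
    needs: ci
    steps:
      - uses: actions/checkout@v1
      - name: Docker login
        env:
          # assumption: expose the secrets to this step only, under the same names
          DOCKER_USER: ${{ secrets.DOCKER_USER }}
          DOCKER_PASSWORD: ${{ secrets.DOCKER_PASSWORD }}
        # the password is read from stdin instead of the command line
        run: echo "$DOCKER_PASSWORD" | docker login -u "$DOCKER_USER" --password-stdin
```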

In the next step we will continue working on the cd job (this time building the Docker Image).

9. Build Docker Image

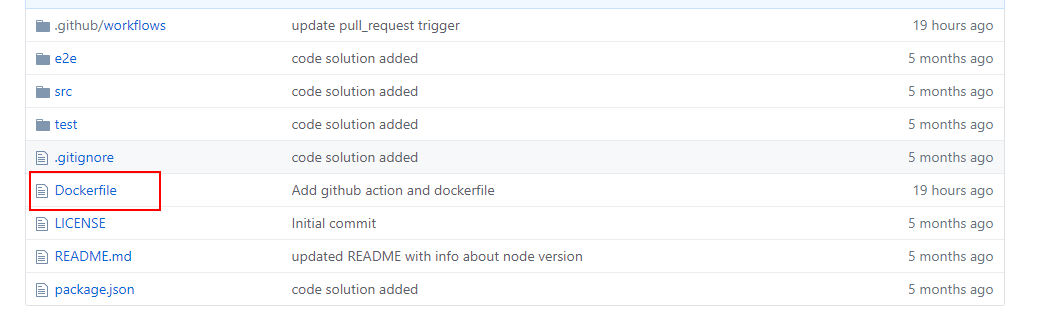

In the previous post we created a Dockerfile configuring the build steps. Let's copy the content of that file and place it at the root of your repository (filename: Dockerfile).

./Dockerfile

    FROM node
    WORKDIR /opt/back
    COPY . .
    RUN npm install
    EXPOSE 3000
    ENTRYPOINT ["npm", "start"]

Just as a reminder about this Dockerfile configuration:

- FROM node We're setting up the base image from node Docker Hub image.

- WORKDIR /opt/back We're setting up the work directory on /opt/back.

- COPY . . Copies the repository content into the container, under the selected working directory /opt/back.

- RUN npm install Installs the dependencies.

- EXPOSE 3000 Documents the port that our app is going to expose.

- ENTRYPOINT ["npm", "start"] Command to start our container.

Let's jump back into the yml file: inside the cd job, right after the docker login, we add the command to build the Docker Container image.

./.github/workflows/main.yml

      ...
          steps:
            - uses: actions/checkout@v1
            - name: Docker login
              run: docker login -u ${{ secrets.DOCKER_USER }} -p ${{ secrets.DOCKER_PASSWORD }}
    +       - name: Build
    +         run: docker build -t back .

This command will search for the Dockerfile file that we have just created at the root of your backend repository, and follow the steps to build it.

Hey! I've just realized something strange is going on: you are using different environments to get started. Github Actions runs the tests on a given linux instance, and the Dockerfile uses another linux / node configuration pulled from the Docker Hub Registry. That's a bad smell, isn't it?

You are totally right! Both the setup node step and the Dockerfile configuration should start from the same container image: we need to make sure that the tests run in the same configuration that we would have in production. The best solution is to use the container section inside a job, which runs the job's steps inside a Docker image, like:

    jobs:
      my_job:
        container:
          image: node:10.16-jessie
          env:
            NODE_ENV: development
          ports:
            - 80

We could update our configuration and it would be something like:

./.github/workflows/main.yml

      ...
      jobs:
        ci:
          runs-on: ubuntu-latest
    +     container:
    +       image: node
          steps:
            - uses: actions/checkout@v1
    -       - uses: actions/setup-node@v1
    -         with:
    -           node-version: '12.x'
            - name: Install & Tests
              run: |
                npm install
                npm test
        cd:
          runs-on: ubuntu-latest
          needs: ci
          steps:
            - uses: actions/checkout@v1
            - name: Docker login
              run: docker login -u ${{ secrets.DOCKER_USER }} -p ${{ secrets.DOCKER_PASSWORD }}
            - name: Build
              run: docker build -t back .

We will apply this image to the ci job, but we won't need to set it up for the cd job, since there we are just building the Docker image defined in the Dockerfile.
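Note that a bare node image resolves to whatever the latest tag points at on the day of the build, so the tests and the production image can still drift apart over time. One way to tighten this, sketched here under the assumption that the node:12 tag matches what you want to run in production, is to pin the same tag in the ci job and in the Dockerfile's FROM line.

```yaml
jobs:
  ci:
    runs-on: ubuntu-latest
    container:
      # assumption: node:12 is the version you ship; use the same tag in the Dockerfile (FROM node:12)
      image: node:12
    steps:
      - uses: actions/checkout@v1
      - name: Install & Tests
        run: |
          npm install
          npm test
```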

10. Tag Docker Images

The current Docker image that we have generated has the following name: back. In order to upload it to the Docker Hub registry, we need to give it a more elaborate and unique name:

- Let's prefix it with the docker user name.

- Let's add a suffix with a unique build number (in this case the commit SHA from Github context).

On the other hand, we will indicate that the current image that we have generated is the latest docker image available.

In a real project, this could vary depending on your needs.

./.github/workflows/main.yml

      ...
          steps:
            - uses: actions/checkout@v1
            - name: Docker login
              run: docker login -u ${{ secrets.DOCKER_USER }} -p ${{ secrets.DOCKER_PASSWORD }}
            - name: Build
              run: docker build -t back .
    +       - name: Tags
    +         run: |
    +           docker tag back ${{ secrets.DOCKER_USER }}/back:${{ github.sha }}
    +           docker tag back ${{ secrets.DOCKER_USER }}/back:latest
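As an alternative, docker build accepts the -t flag more than once, so the build and tag steps could be merged into a single one. This is just a sketch of that variation, showing only the relevant step of the cd job; the rest of this post keeps the separate Build and Tags steps.

```yaml
jobs:
  cd:
    runs-on: ubuntu-latest
    needs: ci
    steps:
      - uses: actions/checkout@v1
      - name: Build and tag
        # each -t adds one name to the image that is being built
        run: |
          docker build \
            -t ${{ secrets.DOCKER_USER }}/back:${{ github.sha }} \
            -t ${{ secrets.DOCKER_USER }}/back:latest \
            .
```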

11. Push Docker Images

Now that we've got unique names, we need to push the Docker Images into the Docker Registry. We will use the docker push command for this.

Note that we first push the ${{ secrets.DOCKER_USER }}/back:${{ github.sha }} image, and then ${{ secrets.DOCKER_USER }}/back:latest. Doesn't this mean that the image will be uploaded twice? The answer is no. Docker is smart enough to identify that the image is the same (its layers already exist in the registry), so it will just assign two different "names" to the same image in the Docker Hub repository.

./.github/workflows/main.yml

      ...
          steps:
            - uses: actions/checkout@v1
            - name: Docker login
              run: docker login -u ${{ secrets.DOCKER_USER }} -p ${{ secrets.DOCKER_PASSWORD }}
            - name: Build
              run: docker build -t back .
            - name: Tags
              run: |
                docker tag back ${{ secrets.DOCKER_USER }}/back:${{ github.sha }}
                docker tag back ${{ secrets.DOCKER_USER }}/back:latest
    +       - name: Push
    +         run: |
    +           docker push ${{ secrets.DOCKER_USER }}/back:${{ github.sha }}
    +           docker push ${{ secrets.DOCKER_USER }}/back:latest

The final result

The final main.yml should look like this:

./.github/workflows/main.yml

    name: Backend Chat CI/CD
    on:
      push:
        branches:
          - master
      pull_request:
        branches:
          - master
    jobs:
      ci:
        runs-on: ubuntu-latest
        container:
          image: node
        steps:
          - uses: actions/checkout@v1
          - name: Install & Tests
            run: |
              npm install
              npm test
      cd:
        runs-on: ubuntu-latest
        needs: ci
        steps:
          - uses: actions/checkout@v1
          - name: Docker login
            run: docker login -u ${{ secrets.DOCKER_USER }} -p ${{ secrets.DOCKER_PASSWORD }}
          - name: Build
            run: docker build -t back .
          - name: Tags
            run: |
              docker tag back ${{ secrets.DOCKER_USER }}/back:${{ github.sha }}
              docker tag back ${{ secrets.DOCKER_USER }}/back:latest
          - name: Push
            run: |
              docker push ${{ secrets.DOCKER_USER }}/back:${{ github.sha }}
              docker push ${{ secrets.DOCKER_USER }}/back:latest
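One thing to keep in mind: this workflow also runs on pull requests, so the cd job would try to publish an image for every pull request as well (and for pull requests coming from forks the secrets are not available, so the login would fail). A possible refinement, sketched here as an assumption rather than as part of the sample repositories, is to guard the cd job with an if condition so that it only runs for pushes to master.

```yaml
jobs:
  # ci job omitted, same as in the workflow above
  cd:
    runs-on: ubuntu-latest
    needs: ci
    # assumption: publish only for pushes to master, never for pull requests
    if: github.event_name == 'push' && github.ref == 'refs/heads/master'
    steps:
      - uses: actions/checkout@v1
      # login, build, tag and push steps stay as in the workflow above
```

With this guard, pull requests still run the ci job (install and tests) but skip the publishing part.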

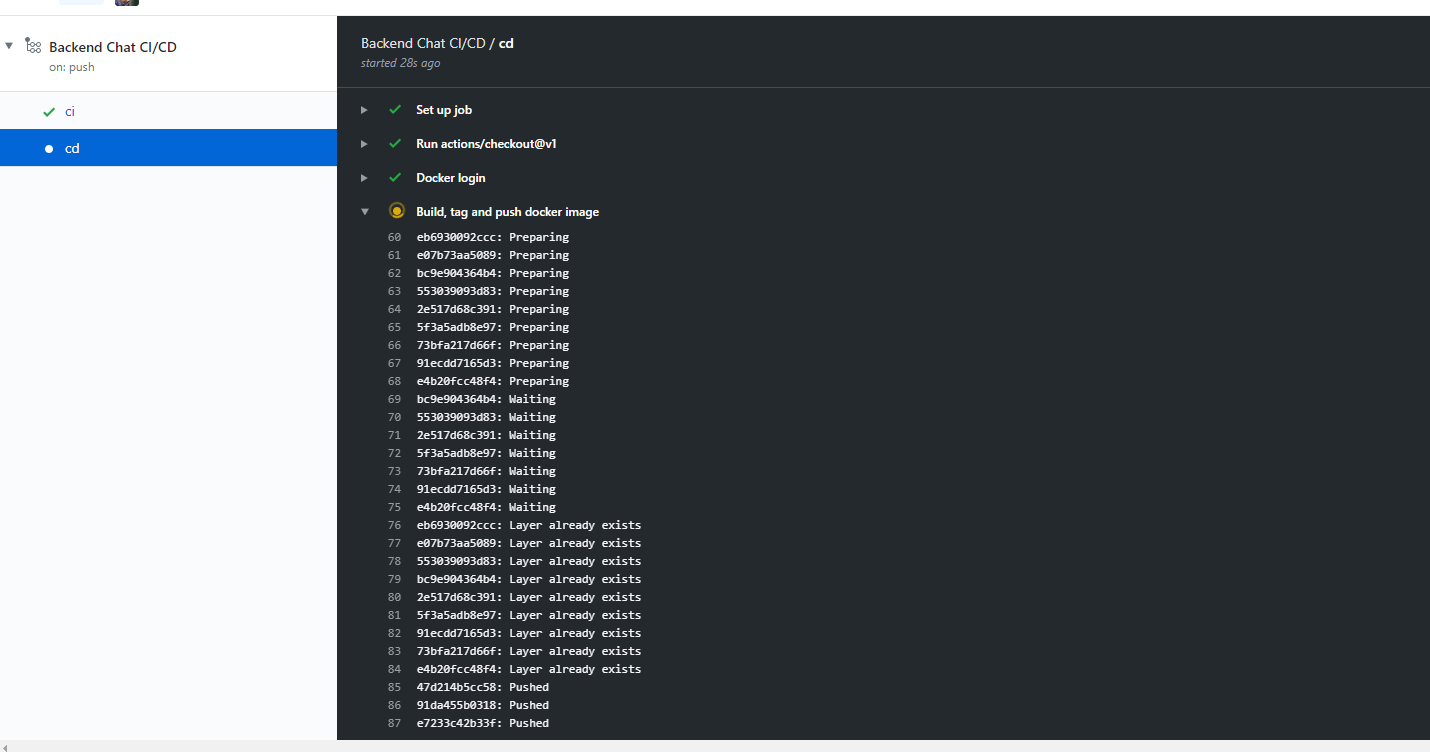

Now if you push all this configuration to Github, it will automatically trigger the build.

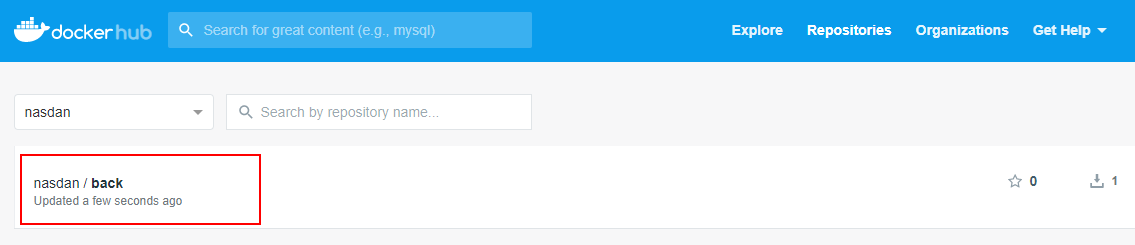

Once finished, you can check if the docker image has been generated successfully:

And we can check if the image is available in our Docker Hub Registry account:

Front End

The steps for creating main.yml are quite similar to the previous one (backend).

- Name the workflow to be created

- Define event triggers

- Define CI job (build and tests)

- Define CD job

- Log into Docker Hub

- Build Docker Image

- Tag Docker Images

- Push Docker Images

1. Name the workflow to be created

Let's define the workflow name:

./.github/workflows/main.yml

+ name: Frontend Chat CI/CD

2. Define event triggers

We will follow a similar approach as in the Back End workflow: We can trigger this workflow on several events like push, pull_request, etc.

In this case we are going to launch the CI/CD process whenever there is a push to master, or Pull Request pointing to master.

./.github/workflows/main.yml

      name: Frontend Chat CI/CD
    + on:
    +   push:
    +     branches:
    +       - master
    +   pull_request:
    +     branches:
    +       - master

3. Define CI job (build and tests)

Define the ci job:

- We will indicate that we are going to use the nodejs image from Docker (Linux + nodejs) and execute the npm install and npm test commands:

./.github/workflows/main.yml

      ...
    + jobs:
    +   ci:
    +     runs-on: ubuntu-latest
    +     container:
    +       image: node
    +     steps:
    +       - uses: actions/checkout@v1
    +       - name: Install & Tests
    +         run: |
    +           npm install
    +           npm test

4. Define CD job

Create the cd job, which will start right after the ci job has completed successfully, and check out the repository to get all the files inside the running instance.

./.github/workflows/main.yml

      ...
      jobs:
        ...
    +   cd:
    +     runs-on: ubuntu-latest
    +     needs: ci
    +     steps:
    +       - uses: actions/checkout@v1

5. Log into Docker Hub

The next step is to log into Docker Hub.

./.github/workflows/main.yml

      ...
      jobs:
        ...
        cd:
          runs-on: ubuntu-latest
          needs: ci
          steps:
            - uses: actions/checkout@v1
    +       - name: Docker login
    +         run: docker login -u ${{ secrets.DOCKER_USER }} -p ${{ secrets.DOCKER_PASSWORD }}

6. Build Docker Image

Let's build the Docker Image.

./.github/workflows/main.yml

      ...
      jobs:
        ...
        cd:
          runs-on: ubuntu-latest
          needs: ci
          steps:
            - uses: actions/checkout@v1
            - name: Docker login
              run: docker login -u ${{ secrets.DOCKER_USER }} -p ${{ secrets.DOCKER_PASSWORD }}
    +       - name: Build
    +         run: docker build -t front .

7. Tag Docker Images

As we did with the backend application, we are going to tag the current version using the commit SHA and define it as latest.

./.github/workflows/main.yml

      ...
      jobs:
        ...
        cd:
          runs-on: ubuntu-latest
          needs: ci
          steps:
            - uses: actions/checkout@v1
            - name: Docker login
              run: docker login -u ${{ secrets.DOCKER_USER }} -p ${{ secrets.DOCKER_PASSWORD }}
            - name: Build
              run: docker build -t front .
    +       - name: Tags
    +         run: |
    +           docker tag front ${{ secrets.DOCKER_USER }}/front:${{ github.sha }}
    +           docker tag front ${{ secrets.DOCKER_USER }}/front:latest

8. Push Docker Images

Now we only need to push the images.

./.github/workflows/main.yml

      ...
      jobs:
        ...
        cd:
          runs-on: ubuntu-latest
          needs: ci
          steps:
            - uses: actions/checkout@v1
            - name: Docker login
              run: docker login -u ${{ secrets.DOCKER_USER }} -p ${{ secrets.DOCKER_PASSWORD }}
            - name: Build
              run: docker build -t front .
            - name: Tags
              run: |
                docker tag front ${{ secrets.DOCKER_USER }}/front:${{ github.sha }}
                docker tag front ${{ secrets.DOCKER_USER }}/front:latest
    +       - name: Push
    +         run: |
    +           docker push ${{ secrets.DOCKER_USER }}/front:${{ github.sha }}
    +           docker push ${{ secrets.DOCKER_USER }}/front:latest

And the final main.yml should be like the following:

./.github/workflows/main.yml

    name: Frontend Chat CI/CD
    on:
      push:
        branches:
          - master
      pull_request:
        branches:
          - master
    jobs:
      ci:
        runs-on: ubuntu-latest
        container:
          image: node
        steps:
          - uses: actions/checkout@v1
          - name: Install & Tests
            run: |
              npm install
              npm test
      cd:
        runs-on: ubuntu-latest
        needs: ci
        steps:
          - uses: actions/checkout@v1
          - name: Docker login
            run: docker login -u ${{ secrets.DOCKER_USER }} -p ${{ secrets.DOCKER_PASSWORD }}
          - name: Build
            run: docker build -t front .
          - name: Tags
            run: |
              docker tag front ${{ secrets.DOCKER_USER }}/front:${{ github.sha }}
              docker tag front ${{ secrets.DOCKER_USER }}/front:latest
          - name: Push
            run: |
              docker push ${{ secrets.DOCKER_USER }}/front:${{ github.sha }}
              docker push ${{ secrets.DOCKER_USER }}/front:latest

Finally we will add the Dockerfile to the frontend project, as we did in the previous post:

./Dockerfile

    FROM node AS builder
    WORKDIR /opt/front
    COPY . .
    RUN npm install
    RUN npm run build:prod

    FROM nginx
    WORKDIR /var/www/front
    COPY --from=builder /opt/front/dist/ .
    COPY nginx.conf /etc/nginx/

And include the nginx.conf file:

./nginx.conf

    worker_processes 2;
    user www-data;

    events {
      use epoll;
      worker_connections 128;
    }

    http {
      include mime.types;
      charset utf-8;

      server {
        listen 80;

        location / {
          root /var/www/front;
        }
      }
    }

Just as a reminder about this nginx.conf file:

- worker_processes the dedicated number of processes.

- user defines the user that worker processes are going to use.

- events set of directives for connection management.

- epoll efficient method used on linux 2.6+

- worker_connections maximum number of simultaneous connections that can be opened by a worker process.

- http defines the HTTP server directives.

- server in http context defines a virtual server

- listen the port where the virtual server will be listening

- location the path from which the files are going to be served. Notice that /var/www/front is the place where we copied the related built files.

Running multi container system

Let's check if our CI configuration is working as expected. First let's make sure that Github Actions has run at least one successful build.

You should see that a Github Actions build has been launched for the Front End and Back End repos (log in to each Github repository):

You should see the images available in the Docker registry (log in to Docker Hub):

As we did in our previous post, we can launch our whole system using Docker Compose. However, in this case for the Front End and Back End we are going to consume the image containers that we have uploaded to the Docker Hub Registry.

The changes that we are going to introduce to that docker-compose.yml are:

./docker-compose.yml

      version: '3.7'
      services:
        front:
    -     build: ./container-chat-front-example
    +     image: <Docker Hub user name>/front:<version>
        back:
    -     build: ./container-chat-back-example
    +     image: <Docker Hub user name>/back:<version>
        lb:
          build: ./container-chat-lb-example
          depends_on:
            - front
            - back
          ports:
            - '80:80'

So the docker-compose.yml will be like:

    version: "3.7"
    services:
      front:
        image: <Docker Hub user name>/front:<version>
      back:
        image: <Docker Hub user name>/back:<version>
      lb:
        build: ./container-chat-lb-example
        depends_on:
          - front
          - back
        ports:
          - "80:80"

We can launch it:

$ docker-compose up

It will download the back and front images from the Docker Hub Registry (the latest available ones, if you use latest as the version). You can check out how it works by opening your web browser and typing http://localhost/ (more information about how this works in our previous post Hello Docker).
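If you want to switch between build versions without editing the file each time, Docker Compose can substitute environment variables in the image names. The sketch below assumes two variables that are not part of the original setup, DOCKER_USER and TAG; when TAG is not set, it falls back to latest.

```yaml
version: "3.7"
services:
  front:
    # DOCKER_USER and TAG are hypothetical variable names chosen for this example
    image: "${DOCKER_USER}/front:${TAG:-latest}"
  back:
    image: "${DOCKER_USER}/back:${TAG:-latest}"
  lb:
    build: ./container-chat-lb-example
    depends_on:
      - front
      - back
    ports:
      - "80:80"
```

You could then launch a given build by setting both variables before running docker-compose up, using one of the commit SHA tags pushed by the workflow as TAG. Since front and back live in different repositories, their commit SHAs differ, so for pinning both to specific builds you may prefer one variable per service.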

Resources

- Previous Post Docker

- Previous Post Travis

- Front End repository

- Back End repository

- Configuring workflows

- Docker Hub

Wrapping up

By introducing this CI/CD step (CI stands for Continuous Integration, CD stands for Continuous Delivery), we've got several benefits:

- The build process gets automated, so we avoid manual errors.

- We can easily deploy different build versions (like in a jukebox; see the diagram).

- We can easily roll back a failed release.

- We can apply A/B testing or have Canary environments.

Gopal Jani

https://www.linkedin.com/in/gopal-jani/
